I had the opportunity to speak at the "Actions de tutorat innovantes" (Innovative Tutoring Initiatives) workshop at the Quartiers du numérique on Tuesday, October 15, 2024, at Université Paris Sciences Lettres (PSL), during a day devoted to exploring the innovative practices that are shaping digital education.

Thank you to Vincent Beillevaire, Marie Zocli, Solange Faria Pereira, Amandine Rannou and Joel Oudinet for welcoming me onto their panel!

Here is a short summary of what we presented about the AI module built into ChallengeMe.

The event was the perfect opportunity to explain why we chose to add AI to our platform.

Our decision to integrate AI into our platform is driven by several factors:

Finally, our decision to integrate AI reflects our commitment to preparing students for a future in which AI will be everywhere. By exposing students to AI tools in an educational setting, we help them develop the skills they need to engage with these technologies critically and productively. During our talk at PSL, we stressed that our approach to AI remains human-centered. AI is a powerful tool, but it does not replace human judgment. Its role is to augment the capabilities of teachers and students, not to supplant them.

Our philosophy is clear: we use AI in a targeted, encapsulated way, that is, by building it into specific features of our platform, each with clearly defined objectives. This "encapsulated" approach lets us get the most out of AI while keeping control over how it is used. We are not trying to build an all-knowing AI that would take over every aspect of learning. On the contrary, we develop precise AI tools designed to meet specific needs identified by our users and academic partners.

I emphasized that in all of these use cases, AI supports users (students, teachers, tutors) rather than replacing them. Its purpose is to facilitate, streamline and enrich human interactions, which remain at the heart of the learning experience on ChallengeMe.

Our platform is flexible in terms of tutor types, a feature that schools using ChallengeMe particularly appreciate. We distinguish several profiles:

This diversity of profiles makes for a rich, multidimensional assessment that combines the benefits of peer assessment with those of expert assessment.

I stressed that AI supports tutors in these different roles by giving them tools to make their work more effective, but never takes their place.

I highlighted the two major challenges of peer assessment: giving relevant feedback, and receiving and then acting on that feedback.

Writing constructive, useful feedback is a complex skill for many students. The main pitfalls are:

Yet this skill is essential, not only during one's studies but also in one's future professional life.

Just as crucial, and just as delicate, is the art of receiving feedback and putting it to good use. Students may run into several obstacles:

Yet the ability to integrate feedback is fundamental to continuous progress and to developing a reflective stance on one's own work.

These two challenges lie at the heart of peer assessment. Meeting them requires appropriate pedagogical support, but also well-designed technology tools that ease the process without distorting the human interaction.

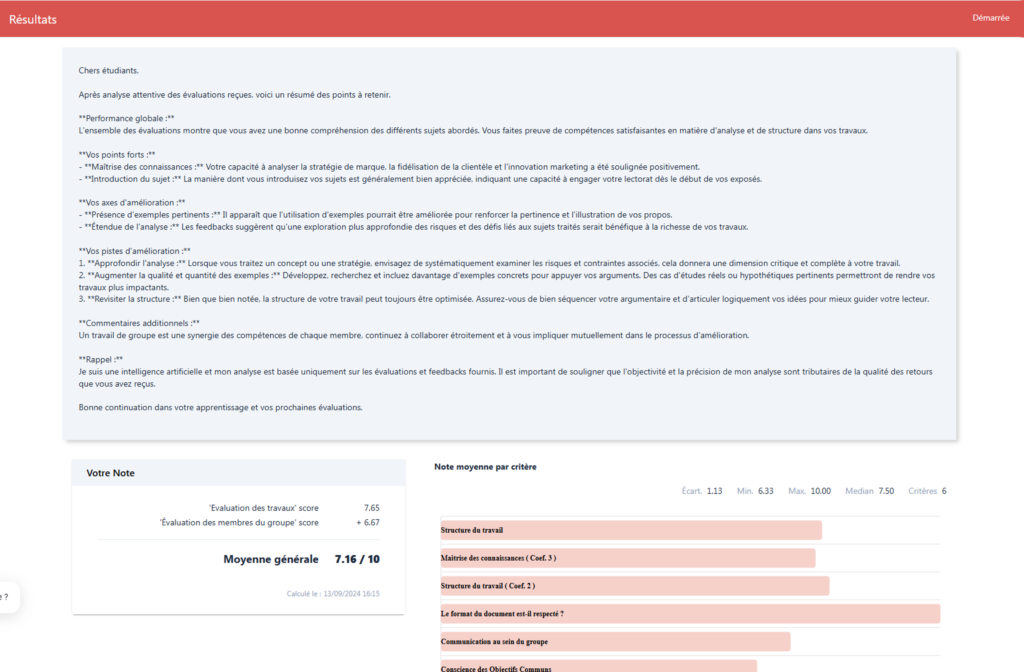

At OMNES Education, we deployed an AI feature that analyzes and summarizes the feedback a student receives. It aims to streamline peer assessment by making feedback more accessible and actionable.

Once a student has submitted a piece of work and received evaluations from their peers, our AI steps in:

Here is an example:

This AI synthesis offers several benefits:

It lets students grasp the key messages of the feedback they received quickly and clearly, without being overwhelmed by a large volume of information. It also gives them concrete ways to progress, encouraging a habit of continuous improvement.
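The synthesis itself is produced by an AI model whose internals were not detailed in the talk. Purely as an illustration, the sketch below shows one plausible preprocessing stage, assuming each peer review is a set of per-criterion scores plus free-text comments: it groups the reviews by criterion, averages the scores, flags the weakest criteria and gathers the comments into a structured summary that could then be handed to a language model for rephrasing. The names `PeerReview`, `summarize_feedback` and the `weak_threshold` parameter are hypothetical and are not ChallengeMe's API.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class PeerReview:
    """One peer's evaluation (hypothetical structure)."""
    scores: dict[str, float]  # criterion name -> score, e.g. on a 0-5 scale
    comments: dict[str, str]  # criterion name -> free-text comment


def summarize_feedback(reviews: list[PeerReview], weak_threshold: float = 3.0) -> dict:
    """Group peer reviews by criterion and flag the weakest criteria.

    The result is a structured summary that a language model could be
    asked to turn into a short synthesis for the student (assumption).
    """
    by_criterion: dict[str, dict] = {}
    for review in reviews:
        for criterion, score in review.scores.items():
            entry = by_criterion.setdefault(criterion, {"scores": [], "comments": []})
            entry["scores"].append(score)
            comment = review.comments.get(criterion, "").strip()
            if comment:
                entry["comments"].append(comment)

    summary = {}
    for criterion, entry in by_criterion.items():
        avg = round(mean(entry["scores"]), 2)
        summary[criterion] = {
            "average": avg,
            "to_improve": avg < weak_threshold,  # hypothetical cut-off
            "comments": entry["comments"],
        }
    return summary


if __name__ == "__main__":
    reviews = [
        PeerReview({"clarity": 4, "argument": 2}, {"argument": "Sources are missing."}),
        PeerReview({"clarity": 5, "argument": 3}, {"clarity": "Well structured."}),
    ]
    for criterion, info in summarize_feedback(reviews).items():
        print(criterion, info)
```

Keeping this aggregation deterministic and leaving only the final wording to the model is one way to stay within the "encapsulated" use of AI described earlier, since every point in the synthesis can be traced back to a peer's score or comment.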

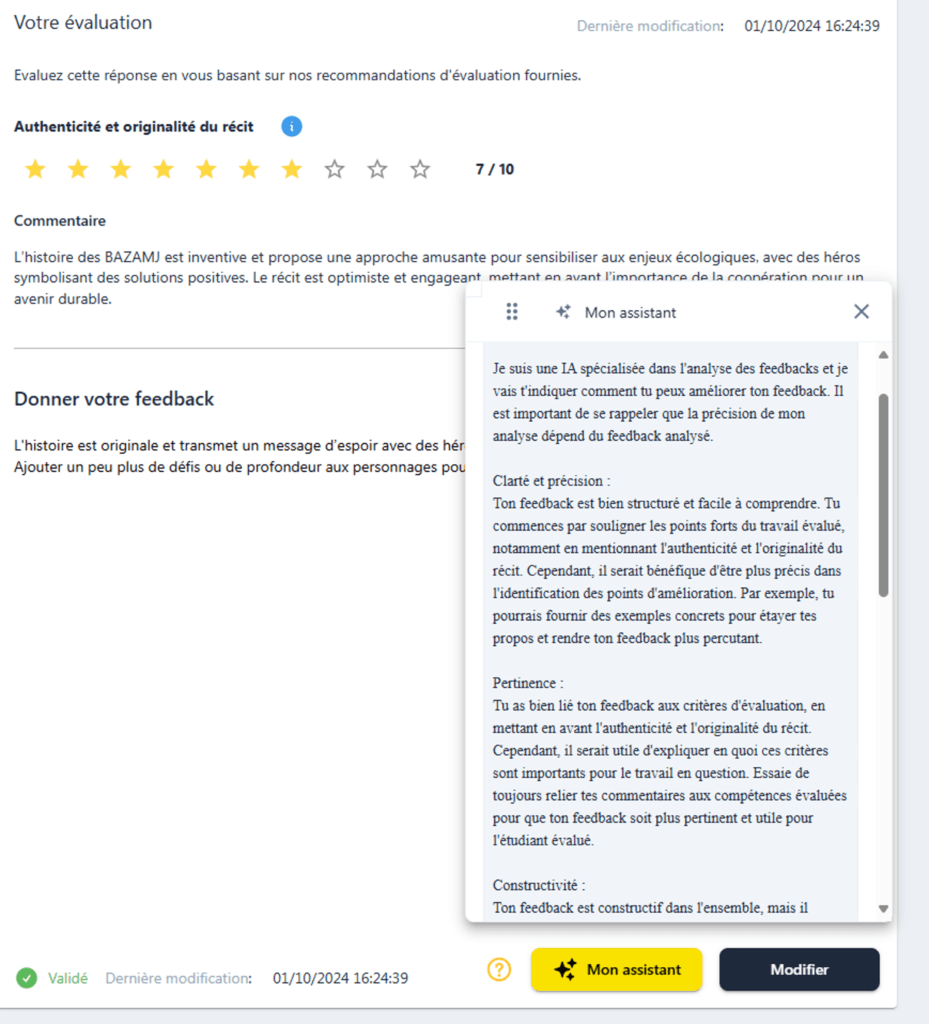

We deployed an AI assistant that guides students throughout the process of writing feedback. The tool draws on the assessment criteria established by UC Louvain and on extensive research into peer assessment (M. Gielen, B. De Wever (2015), Structuring the peer assessment process: a multilevel approach for the impact of product improvement and peer feedback quality, Journal of Computer Assisted Learning, 31(5), 435-449).

The AI assistant analyzes the feedback written by the student along with the grades they gave their peer. It then compares these elements against predefined quality criteria and, on that basis, generates personalized suggestions for improving the feedback. For example, if a student gives a low grade without a detailed justification, the assistant might suggest: "Your evaluation seems harsh. Could you elaborate on your observations to help your peer understand the reasons for this grade?" Similarly, if the feedback lacks concrete suggestions for improvement, the AI might propose: "Try to include specific recommendations for each weakness you identified."

This approach not only improves the quality of the feedback students give, but also develops their critical and communication skills. By guiding them toward more constructive and thoughtful assessment practices, we prepare students to become professionals who can give and receive feedback effectively throughout their careers.
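The assistant relies on an AI model, and its exact rules were not presented. To make the two examples above concrete, here is a minimal rule-based sketch that uses simple length and keyword heuristics as stand-ins for the model's judgment, assuming a 0-20 grading scale. It flags a low grade that comes with a short justification, and feedback that contains no improvement suggestion, returning the two messages quoted above. The function `check_feedback`, the thresholds and the keyword list are all hypothetical.

```python
# Hypothetical thresholds, assuming grades on a 0-20 scale.
LOW_GRADE = 10
MIN_JUSTIFICATION_WORDS = 25
# Crude stand-in for detecting improvement suggestions (assumption).
SUGGESTION_MARKERS = ("you could", "try to", "consider", "i suggest", "instead")


def check_feedback(grade: float, feedback: str) -> list[str]:
    """Return improvement hints for a piece of peer feedback.

    Rule-based illustration only: the real assistant uses an AI model
    to compare the feedback with predefined quality criteria.
    """
    hints = []
    text = feedback.lower()
    word_count = len(feedback.split())

    # Rule 1: a low grade should come with a detailed justification.
    if grade < LOW_GRADE and word_count < MIN_JUSTIFICATION_WORDS:
        hints.append(
            "Your evaluation seems harsh. Could you elaborate on your observations "
            "to help your peer understand the reasons for this grade?"
        )

    # Rule 2: feedback should contain at least one concrete suggestion.
    if not any(marker in text for marker in SUGGESTION_MARKERS):
        hints.append(
            "Try to include specific recommendations for each weakness you identified."
        )
    return hints


if __name__ == "__main__":
    for hint in check_feedback(6, "Weak argument, not convincing."):
        print("-", hint)
```

Keyword rules like these are brittle (they miss suggestions phrased differently), which is precisely the kind of judgment that a language model handles better; the sketch only shows where such checks sit in the workflow.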

Here is an example:

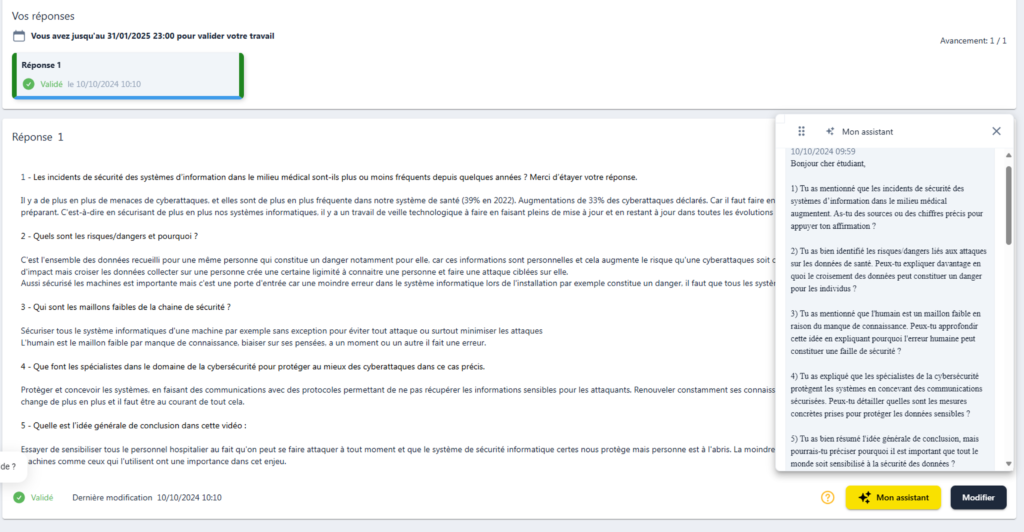

At the Université de Montpellier, we deployed an AI assistant that analyzes the work a student produces and suggests areas for improvement based on the criteria-based rubric provided by the teacher. The goal is to help students understand exactly what is expected and improve on their own.

The assistant steps in after the student has submitted their work. It compares the submission against the assessment criteria defined by the teacher. For each criterion that is not fully met, the AI generates concrete suggestions for improvement, drawing on good practice in the field. It can also recommend relevant learning resources (articles, videos, exercises) to help the student progress on those specific points.

Early feedback from the Université de Montpellier is very encouraging. Students appreciate this personalized help, which lets them better understand what teachers expect and how to improve their work in a targeted way. Teachers report that submissions are better aligned with the assessment criteria and that students' skills progress faster.
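Here too, the analysis of the work itself is done by an AI model. The loop described above (go through the teacher's rubric, keep the criteria that are not fully met, attach suggestions and resources to each) can nonetheless be sketched as follows, assuming the model has already produced a score per criterion. In this simplification a fixed hint stored with each criterion stands in for the AI-generated suggestion; `Criterion`, `improvement_plan` and the sample resources are hypothetical placeholders.

```python
from dataclasses import dataclass, field


@dataclass
class Criterion:
    """One line of the teacher's criteria-based rubric (hypothetical)."""
    name: str
    max_score: int
    advice: str  # stand-in for the AI-generated suggestion
    resources: list[str] = field(default_factory=list)  # articles, videos, exercises


def improvement_plan(rubric: list[Criterion], scores: dict[str, int]) -> list[dict]:
    """List improvement items for every criterion that is not fully met.

    `scores` is assumed to come from an upstream AI assessment of the work;
    a criterion with no score is treated as scoring 0.
    """
    plan = []
    for criterion in rubric:
        score = scores.get(criterion.name, 0)
        if score < criterion.max_score:
            plan.append({
                "criterion": criterion.name,
                "gap": criterion.max_score - score,
                "advice": criterion.advice,
                "resources": criterion.resources,
            })
    # Address the largest gaps first.
    plan.sort(key=lambda item: item["gap"], reverse=True)
    return plan


if __name__ == "__main__":
    rubric = [
        Criterion("problem statement", 4, "State the research question explicitly.",
                  ["Article: writing a problem statement"]),
        Criterion("methodology", 6, "Justify the choice of method.",
                  ["Video: choosing a method", "Exercise: comparing methods"]),
        Criterion("formatting", 2, "Follow the required citation style."),
    ]
    scores = {"problem statement": 3, "methodology": 2, "formatting": 2}
    for item in improvement_plan(rubric, scores):
        print(item["criterion"], item["gap"], item["advice"], item["resources"])
```

Sorting by the size of the gap is one possible design choice: it puts the criteria where the student has the most to gain at the top of the list.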

Here is an example:

Building on these early successes, we are delighted to announce a stronger collaboration with the Université de Montpellier and its research laboratory to develop new AI features. The collaboration aims to explore new ways of using AI to improve learning and assessment, always keeping the student at the center of the process. Together, we will work on various teaching scenarios in which AI can add value for both students and teachers.

For example, we will explore how AI can help teachers analyze student activity, assess student work more efficiently and objectively, or quickly identify students who are struggling.

One of the most interesting aspects of this collaboration is the involvement of a research laboratory that will follow the various experiments. The researchers will analyze the impact and effectiveness of these AI assistants in a real teaching context, and their findings will help us continually refine our tools to make them as relevant and useful as possible.

This collaboration is part of our long-term vision of AI in the service of teaching, used ethically and responsibly to enrich each student's learning experience. We are convinced that AI, when developed and deployed in close collaboration with people working in education, has the potential to revolutionize the way we learn and teach.

Ludovic Charbonnel

Co-founder, ChallengeMe